Overview

This is an experiment in finding Waldo in images.

- Dataset: https://www.kaggle.com/kairess/find-waldo

- Reference source: https://github.com/kairess/find_waldo/blob/master/train.ipynb

Implementation

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.patches as patches

import keras.layers as layers

import keras.optimizers as optimizers

from keras.models import Model, load_model

from keras.utils import to_categorical

from keras.callbacks import LambdaCallback, ModelCheckpoint, ReduceLROnPlateau

import tensorflow as tf

import seaborn as sns

from PIL import Image

from skimage.transform import resize

import threading, random, os

Environment check

Let's check the TensorFlow version and confirm that the GPU was detected.

gpus = tf.config.experimental.list_logical_devices('GPU')

print('>>> Tensorflow Version: {}'.format(tf.__version__))

print('>>> Load GPUS: {}'.format(gpus))

>>> Tensorflow Version: 2.1.4

>>> Load GPUS: [LogicalDevice(name='/device:GPU:0', device_type='GPU')]

BASE_DIR = os.getcwd()

DATASET_DIR = os.path.join(BASE_DIR, 'datasets')

Let's load the images, which are stored as NumPy arrays.

Each loaded image is divided by 255. so that its values are normalized to the range 0 to 1.

Training on raw 0-255 values means the model has to work over a wide numeric range, so this step shrinks the inputs to between 0 and 1.
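As a small illustration of the normalization step, the sketch below uses a made-up one-pixel array (`img_uint8` is a stand-in, not part of the dataset) to show the cast to float32 followed by the division by 255.

```python
import numpy as np

# A tiny stand-in for an RGB image stored as uint8 (values 0-255).
img_uint8 = np.array([[[0, 128, 255]]], dtype=np.uint8)

# Cast first, then divide, so the result is float32 in the range [0, 1].
img_norm = img_uint8.astype(np.float32) / 255.

print(img_norm.dtype, img_norm.min(), img_norm.max())
```

Casting before dividing keeps the array in float32; dividing a uint8 array directly would give float64 and double the memory used.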

imgs = np.load(os.path.join(DATASET_DIR, 'imgs_uint8.npy'), allow_pickle=True).astype(np.float32) / 255.

labels = np.load(os.path.join(DATASET_DIR, 'labels_uint8.npy'), allow_pickle=True).astype(np.float32) / 255.

waldo_sub_imgs = np.load(os.path.join(DATASET_DIR, 'waldo_sub_imgs_uint8.npy'), allow_pickle=True) / 255.

waldo_sub_labels = np.load(os.path.join(DATASET_DIR, 'waldo_sub_labels_uint8.npy'), allow_pickle=True) / 255.

Let's print the loaded NumPy image data to see what shape the arrays have.

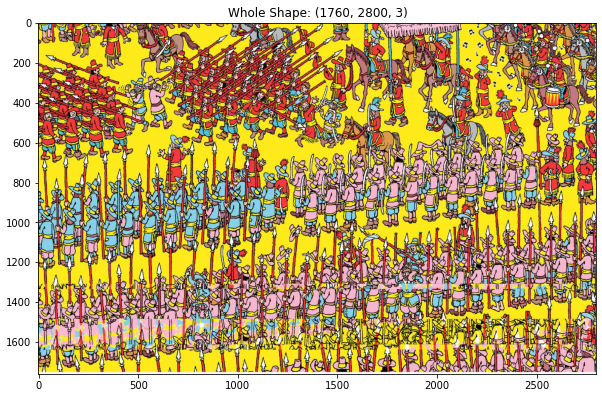

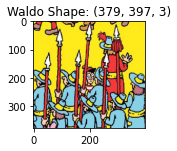

The full images are 1760px high and 2800px wide with 3 (RGB) channels,

while the Waldo-only image is 379px high and 397px wide, also with 3 (RGB) channels.

imgs[0].shape, waldo_sub_imgs[0].shape

((1760, 2800, 3), (379, 397, 3))

Image visualization

print('>>> Image Visualization')

plt.figure(figsize=(10, 10))

plt.title("Whole Shape: {}".format(imgs[0].shape))

plt.imshow(imgs[0])

plt.figure(figsize=(2, 2))

plt.title("Waldo Shape: {}".format(waldo_sub_imgs[0].shape))

plt.imshow(waldo_sub_imgs[0])

plt.show()

>>> Image Visualization

Creating a batch iterator

We crop the images at random to generate a large number of training samples.

PANNEL_SIZE = 224

class BatchIndices(object):
    """
    Generates batches of shuffled indices.
    # Arguments
        n: number of indices
        bs: batch size
        shuffle: whether to shuffle indices, default False
    """
    def __init__(self, n, bs, shuffle=False):
        self.n, self.bs, self.shuffle = n, bs, shuffle
        self.lock = threading.Lock()
        self.reset()

    def reset(self):
        self.idxs = (np.random.permutation(self.n)
                     if self.shuffle else np.arange(0, self.n))
        self.curr = 0

    def __next__(self):
        with self.lock:
            # start a new pass once every index has been handed out
            if self.curr >= self.n: self.reset()
            ni = min(self.bs, self.n-self.curr)
            res = self.idxs[self.curr:self.curr+ni]
            self.curr += ni
            return res

# Check the iterator

sample_train = imgs[:100]

total_count = sample_train.shape[0]

batch_size = 10

print('>>> No Shuffle')

idx_gen = BatchIndices(total_count, batch_size, False)

print(idx_gen.__next__())

print(idx_gen.__next__())

print(idx_gen.__next__())

print(' ')

print('>>> Shuffle')

idx_gen = BatchIndices(total_count, batch_size, True)

print(idx_gen.__next__())

print(idx_gen.__next__())

print(idx_gen.__next__())

>>> No Shuffle

[0 1 2 3 4 5 6 7 8 9]

[10 11 12 13 14 15 16 17]

[0 1 2 3 4 5 6 7 8 9]

>>> Shuffle

[ 1 4 16 6 11 12 2 0 14 5]

[ 9 7 15 3 17 8 13 10]

[10 16 0 15 1 14 2 7 13 17]

class segm_generator(object):
    """
    Generates batches of sub-images.
    # Arguments
        x: array of inputs
        y: array of targets
        bs: batch size
        out_sz: dimension of sub-image
        train: If true, will shuffle/randomize sub-images
        waldo: If true, allow sub-images to contain targets.
    """
    def __init__(self, x, y, bs=64, out_sz=(224,224), train=True, waldo=True):
        self.x, self.y, self.bs, self.train = x,y,bs,train
        self.waldo = waldo
        self.n = x.shape[0]
        self.ri, self.ci = [], []
        for i in range(self.n):
            ri, ci, _ = x[i].shape
            self.ri.append(ri)
            self.ci.append(ci)
        self.idx_gen = BatchIndices(self.n, bs, train)
        self.ro, self.co = out_sz
        self.ych = self.y.shape[-1] if len(y.shape)==4 else 1

    def get_slice(self, i,o):
        # random offset while training, otherwise the last o pixels
        start = random.randint(0, i-o) if self.train else (i-o)
        return slice(start, start+o)

    def get_item(self, idx):
        slice_r = self.get_slice(self.ri[idx], self.ro)
        slice_c = self.get_slice(self.ci[idx], self.co)
        x = self.x[idx][slice_r, slice_c]
        y = self.y[idx][slice_r, slice_c]
        # random horizontal flip while training
        if self.train and (random.random()>0.5):
            y = y[:,::-1]
            x = x[:,::-1]
        # when waldo=False, reject crops that contain any Waldo pixel
        if not self.waldo and np.sum(y)!=0:
            return None
        return x, to_categorical(y, num_classes=2).reshape((y.shape[0] * y.shape[1], 2))

    def __next__(self):
        idxs = self.idx_gen.__next__()
        items = []
        for idx in idxs:
            item = self.get_item(idx)
            if item is not None:
                items.append(item)
        if not items:
            return None
        xs,ys = zip(*tuple(items))
        return np.stack(xs), np.stack(ys)

sample_imgs = imgs

sample_labels = labels

sample_sg = segm_generator(sample_imgs, sample_labels, imgs.shape[0])

sample_out = sample_sg.__next__()

sample_x, sample_y = sample_out

print('>>> Sample Shape X: {}, y: {}'.format(sample_x.shape, sample_y.shape))

print('>>> Exist Waldo: {}'.format(np.any(sample_y[0][:,1]==1)))

plt.figure(figsize=(2, 2))

plt.title("Sample Shape: {}".format(sample_x[0].shape))

plt.imshow(sample_x[0])

>>> Sample Shape X: (18, 224, 224, 3), y: (18, 50176, 2)

>>> Exist Waldo: False

<matplotlib.image.AxesImage at 0x1d86bc92508>

sample_imgs = waldo_sub_imgs

sample_labels = waldo_sub_labels

sample_sg = segm_generator(sample_imgs, sample_labels, imgs.shape[0])

sample_out = sample_sg.__next__()

sample_x, sample_y = sample_out

print('>>> Sample Shape X: {}, y: {}'.format(sample_x.shape, sample_y.shape))

print('>>> Exist Waldo: {}'.format(np.any(sample_y[0][:,1]==1)))

plt.figure(figsize=(2, 2))

plt.title("Sample Shape: {}".format(sample_x[0].shape))

plt.imshow(sample_x[0])

>>> Sample Shape X: (18, 224, 224, 3), y: (18, 50176, 2)

>>> Exist Waldo: True

<matplotlib.image.AxesImage at 0x1d830bbb148>

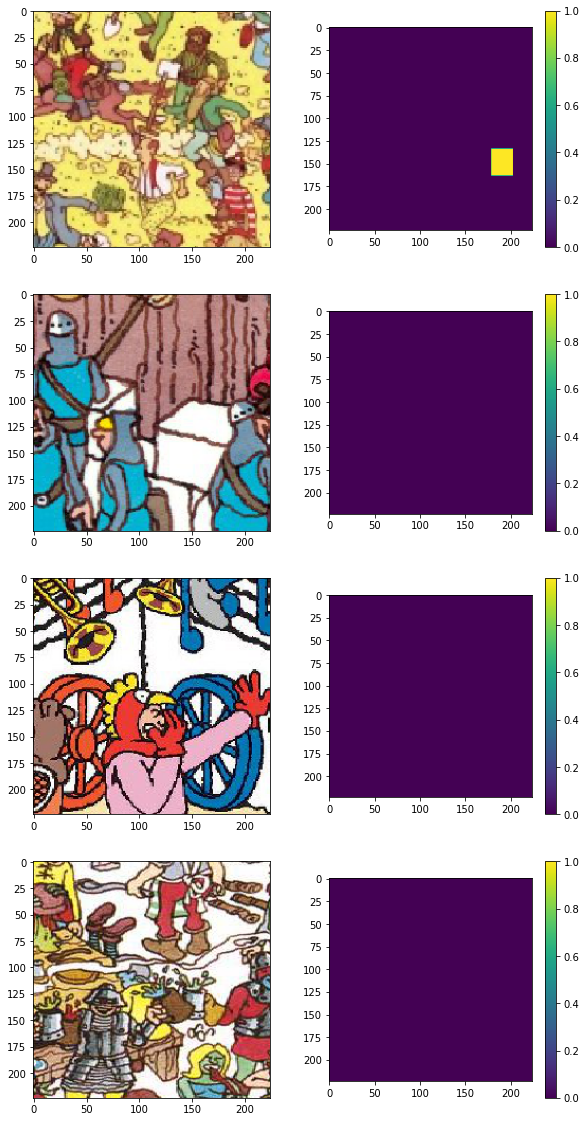
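The next cell calls `seg_gen_mix`, which is not defined anywhere in this post. The following is only a sketch of what such a class might look like, inferred from how it is called; the definition in the reference notebook may differ. The `gen_cls` parameter is a hypothetical addition so the sketch can be tried without the rest of the notebook; by default it falls back to the `segm_generator` class defined above.

```python
import numpy as np


class seg_gen_mix(object):
    """Sketch: yields batches that mix Waldo crops with background crops."""

    def __init__(self, x1, y1, x2, y2, tot_bs=4, prop=0.34,
                 out_sz=(224, 224), train=True, gen_cls=None):
        # gen_cls is a hypothetical hook (not in the original call); when it
        # is omitted we assume segm_generator from this post is in scope.
        if gen_cls is None:
            gen_cls = segm_generator
        self.bs1 = int(tot_bs * prop)   # crops taken from the Waldo sub-images
        self.bs2 = tot_bs - self.bs1    # crops taken from the full images
        self.sg1 = gen_cls(x1, y1, self.bs1, out_sz=out_sz, train=train)
        # waldo=False rejects full-image crops that happen to contain Waldo
        self.sg2 = gen_cls(x2, y2, self.bs2, out_sz=out_sz, train=train,
                           waldo=False)

    def __iter__(self):
        return self

    def __next__(self):
        out1 = next(self.sg1)
        out2 = next(self.sg2)
        if out2 is None:  # every background crop was rejected
            return out1
        x1, y1 = out1
        x2, y2 = out2
        return np.concatenate((x1, x2)), np.concatenate((y1, y2))
```

Under this sketch, `tot_bs=4` with `prop=0.34` gives one Waldo crop and three background crops per batch, and `tot_bs=6` gives two and four.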

With a batch size of 4 and a ratio of 34% (the share of crops that contain Waldo), let's generate a batch of randomly cropped images.

# waldo : not_waldo = 1 : 2 (0.34)

gen_mix = seg_gen_mix(waldo_sub_imgs, waldo_sub_labels, imgs, labels, tot_bs=4, prop=0.34, out_sz=(PANNEL_SIZE, PANNEL_SIZE))

X, y = next(gen_mix)

plt.figure(figsize=(10, 20))

for i, img in enumerate(X):
    plt.subplot(X.shape[0], 2, 2*i+1)
    plt.imshow(X[i])
    plt.subplot(X.shape[0], 2, 2*i+2)
    # draw the mask first so that the colorbar attaches to it
    plt.imshow(y[i][:,1].reshape((PANNEL_SIZE, PANNEL_SIZE)))
    plt.colorbar()

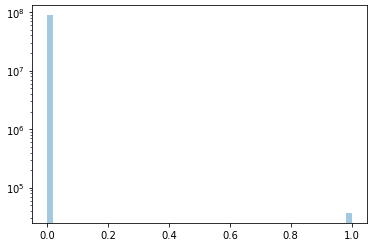

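The training set has more images without Waldo than with Waldo (a class imbalance), so training may not progress well.

To train effectively despite this, we set class_weight when fitting the model.

Put simply, the under-represented class is given a larger weight so that both classes contribute more evenly during training.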

https://www.tensorflow.org/tutorials/structured_data/imbalanced_data?hl=ko
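To make the idea of class weighting concrete, here is a minimal sketch on a toy mask. It uses the common "balanced" heuristic (total pixels divided by the number of classes times the class count), which is an assumption for illustration and not the weighting this post uses; `balanced_class_weights` and `mask` are hypothetical names.

```python
import numpy as np


def balanced_class_weights(mask):
    """Return {class: weight}, weight = n_pixels / (n_classes * class_count)."""
    flat = mask.astype(np.int64).ravel()
    counts = np.bincount(flat, minlength=2)
    n_classes = counts.size
    return {c: flat.size / (n_classes * counts[c])
            for c in range(n_classes) if counts[c] > 0}


# Toy 10x10 mask in which only 5 of the 100 pixels belong to Waldo (class 1).
mask = np.zeros((10, 10), dtype=np.uint8)
mask[0, :5] = 1

weights = balanced_class_weights(mask)
print(weights)
```

The rare class ends up with a much larger weight than the background class, which is the effect the weighting is meant to have on the loss.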

freq0 = np.sum(labels==0)

freq1 = np.sum(labels==1)

print(freq0, freq1)

sns.distplot(labels.flatten(), kde=False, hist_kws={'log':True})

sample_weights = np.zeros((6, PANNEL_SIZE * PANNEL_SIZE, 2))

sample_weights[:,:,0] = 1. / freq0

sample_weights[:,:,1] = 1.

plt.subplot(1,2,1)

plt.imshow(sample_weights[0,:,0].reshape((224, 224)))

plt.colorbar()

plt.subplot(1,2,2)

plt.imshow(sample_weights[0,:,1].reshape((224, 224)))

plt.colorbar()

<matplotlib.colorbar.Colorbar at 0x24d3f3b3248>

def build_model():
    inputs = layers.Input(shape=(PANNEL_SIZE, PANNEL_SIZE, 3))

    # encoder: conv + pooling, keeping each pooled output as a shortcut
    net = layers.Conv2D(64, kernel_size=3, padding='same')(inputs)
    net = layers.LeakyReLU()(net)
    net = layers.MaxPool2D(pool_size=2)(net)
    shortcut_1 = net

    net = layers.Conv2D(128, kernel_size=3, padding='same')(net)
    net = layers.LeakyReLU()(net)
    net = layers.MaxPool2D(pool_size=2)(net)
    shortcut_2 = net

    net = layers.Conv2D(256, kernel_size=3, padding='same')(net)
    net = layers.LeakyReLU()(net)
    net = layers.MaxPool2D(pool_size=2)(net)
    shortcut_3 = net

    net = layers.Conv2D(256, kernel_size=1, padding='same')(net)
    net = layers.LeakyReLU()(net)
    net = layers.MaxPool2D(pool_size=2)(net)

    # decoder: upsample, conv, then add the matching shortcut
    net = layers.UpSampling2D(size=2)(net)
    net = layers.Conv2D(256, kernel_size=3, padding='same')(net)
    net = layers.Activation('relu')(net)
    net = layers.Add()([net, shortcut_3])

    net = layers.UpSampling2D(size=2)(net)
    net = layers.Conv2D(128, kernel_size=3, padding='same')(net)
    net = layers.Activation('relu')(net)
    net = layers.Add()([net, shortcut_2])

    net = layers.UpSampling2D(size=2)(net)
    net = layers.Conv2D(64, kernel_size=3, padding='same')(net)
    net = layers.Activation('relu')(net)
    net = layers.Add()([net, shortcut_1])

    # per-pixel two-class output, flattened to (pixels, 2)
    net = layers.UpSampling2D(size=2)(net)
    net = layers.Conv2D(2, kernel_size=1, padding='same')(net)
    net = layers.Reshape((-1, 2))(net)
    net = layers.Activation('softmax')(net)

    model = Model(inputs=inputs, outputs=net)
    model.compile(
        loss='categorical_crossentropy',
        optimizer=optimizers.Adam(),
        metrics=['acc'],
        sample_weight_mode='temporal'
    )
    return model

model = build_model()

model.summary()

Model: "model_1"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) (None, 224, 224, 3) 0

__________________________________________________________________________________________________

conv2d_1 (Conv2D) (None, 224, 224, 64) 1792 input_1[0][0]

__________________________________________________________________________________________________

leaky_re_lu_1 (LeakyReLU) (None, 224, 224, 64) 0 conv2d_1[0][0]

__________________________________________________________________________________________________

max_pooling2d_1 (MaxPooling2D) (None, 112, 112, 64) 0 leaky_re_lu_1[0][0]

__________________________________________________________________________________________________

conv2d_2 (Conv2D) (None, 112, 112, 128 73856 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

leaky_re_lu_2 (LeakyReLU) (None, 112, 112, 128 0 conv2d_2[0][0]

__________________________________________________________________________________________________

max_pooling2d_2 (MaxPooling2D) (None, 56, 56, 128) 0 leaky_re_lu_2[0][0]

__________________________________________________________________________________________________

conv2d_3 (Conv2D) (None, 56, 56, 256) 295168 max_pooling2d_2[0][0]

__________________________________________________________________________________________________

leaky_re_lu_3 (LeakyReLU) (None, 56, 56, 256) 0 conv2d_3[0][0]

__________________________________________________________________________________________________

max_pooling2d_3 (MaxPooling2D) (None, 28, 28, 256) 0 leaky_re_lu_3[0][0]

__________________________________________________________________________________________________

conv2d_4 (Conv2D) (None, 28, 28, 256) 65792 max_pooling2d_3[0][0]

__________________________________________________________________________________________________

leaky_re_lu_4 (LeakyReLU) (None, 28, 28, 256) 0 conv2d_4[0][0]

__________________________________________________________________________________________________

max_pooling2d_4 (MaxPooling2D) (None, 14, 14, 256) 0 leaky_re_lu_4[0][0]

__________________________________________________________________________________________________

up_sampling2d_1 (UpSampling2D) (None, 28, 28, 256) 0 max_pooling2d_4[0][0]

__________________________________________________________________________________________________

conv2d_5 (Conv2D) (None, 28, 28, 256) 590080 up_sampling2d_1[0][0]

__________________________________________________________________________________________________

activation_1 (Activation) (None, 28, 28, 256) 0 conv2d_5[0][0]

__________________________________________________________________________________________________

add_1 (Add) (None, 28, 28, 256) 0 activation_1[0][0]

max_pooling2d_3[0][0]

__________________________________________________________________________________________________

up_sampling2d_2 (UpSampling2D) (None, 56, 56, 256) 0 add_1[0][0]

__________________________________________________________________________________________________

conv2d_6 (Conv2D) (None, 56, 56, 128) 295040 up_sampling2d_2[0][0]

__________________________________________________________________________________________________

activation_2 (Activation) (None, 56, 56, 128) 0 conv2d_6[0][0]

__________________________________________________________________________________________________

add_2 (Add) (None, 56, 56, 128) 0 activation_2[0][0]

max_pooling2d_2[0][0]

__________________________________________________________________________________________________

up_sampling2d_3 (UpSampling2D) (None, 112, 112, 128 0 add_2[0][0]

__________________________________________________________________________________________________

conv2d_7 (Conv2D) (None, 112, 112, 64) 73792 up_sampling2d_3[0][0]

__________________________________________________________________________________________________

activation_3 (Activation) (None, 112, 112, 64) 0 conv2d_7[0][0]

__________________________________________________________________________________________________

add_3 (Add) (None, 112, 112, 64) 0 activation_3[0][0]

max_pooling2d_1[0][0]

__________________________________________________________________________________________________

up_sampling2d_4 (UpSampling2D) (None, 224, 224, 64) 0 add_3[0][0]

__________________________________________________________________________________________________

conv2d_8 (Conv2D) (None, 224, 224, 2) 130 up_sampling2d_4[0][0]

__________________________________________________________________________________________________

reshape_1 (Reshape) (None, 50176, 2) 0 conv2d_8[0][0]

__________________________________________________________________________________________________

activation_4 (Activation) (None, 50176, 2) 0 reshape_1[0][0]

==================================================================================================

Total params: 1,395,650

Trainable params: 1,395,650

Non-trainable params: 0

__________________________________________________________________________________________________
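The total in the summary above can be cross-checked by hand: a Conv2D layer has (kernel x kernel x input channels + 1) x output channels parameters, and no other layer in this model has weights. The sketch below redoes that arithmetic; `conv2d_params` and `conv_layers` are names introduced here for illustration.

```python
def conv2d_params(kernel, c_in, c_out):
    """Weights plus one bias per output channel for a square-kernel Conv2D."""
    return (kernel * kernel * c_in + 1) * c_out


# (kernel size, input channels, output channels) for conv2d_1 .. conv2d_8
conv_layers = [
    (3, 3, 64), (3, 64, 128), (3, 128, 256), (1, 256, 256),
    (3, 256, 256), (3, 256, 128), (3, 128, 64), (1, 64, 2),
]

per_layer = [conv2d_params(k, c_in, c_out) for k, c_in, c_out in conv_layers]
total = sum(per_layer)
print(per_layer)
print(total)  # 1395650, matching "Total params" in the summary
```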

gen_mix = seg_gen_mix(waldo_sub_imgs, waldo_sub_labels, imgs, labels, tot_bs=6, prop=0.34, out_sz=(PANNEL_SIZE, PANNEL_SIZE))

def on_epoch_end(epoch, logs):
    print('\r', 'Epoch:%5d - loss: %.4f - acc: %.4f' % (epoch, logs['loss'], logs['acc']), end='')

print_callback = LambdaCallback(on_epoch_end=on_epoch_end)

history = model.fit_generator(
    gen_mix,
    steps_per_epoch=6,
    epochs=500,
    class_weight=sample_weights,
    verbose=0,
    callbacks=[
        print_callback,
        ReduceLROnPlateau(monitor='loss', factor=0.2, patience=100, verbose=1, mode='auto', min_lr=1e-05)
    ]
)

Epoch: 499 - loss: 0.0042 - acc: 0.9982

model.save('model.h5')

plt.figure(figsize=(12, 4))

plt.subplot(1, 2, 1)

plt.title('loss')

plt.plot(history.history['loss'])

plt.subplot(1, 2, 2)

plt.title('accuracy')

plt.plot(history.history['acc'])

[<matplotlib.lines.Line2D at 0x24d3eafd488>]

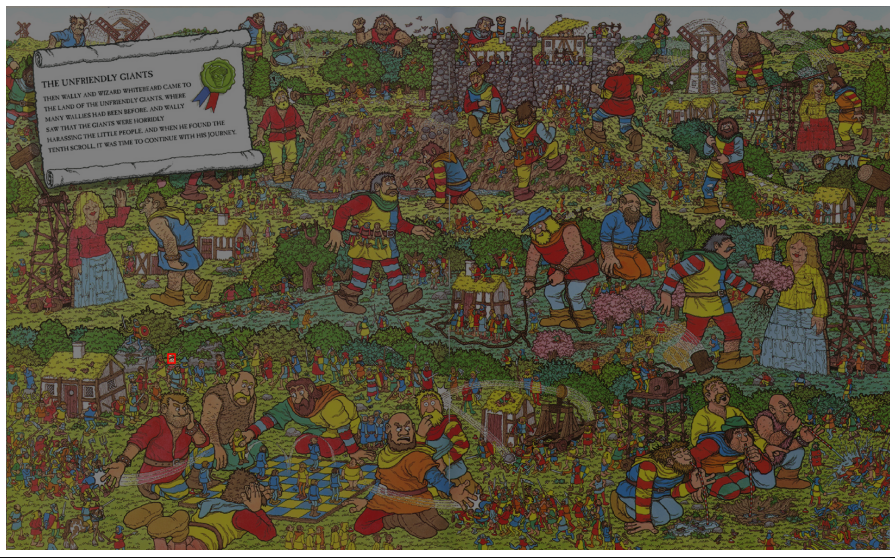

img_filename = '02.jpg'

test_img = np.array(Image.open(os.path.join('test_imgs', img_filename)).resize((2800, 1760), Image.NEAREST)).astype(np.float32) / 255.

plt.figure(figsize=(20, 10))

plt.imshow(test_img)

<matplotlib.image.AxesImage at 0x24d3eb96f48>

def img_resize(img):
    # resize so that height and width are multiples of PANNEL_SIZE
    h, w, _ = img.shape
    nvpanels = int(h/PANNEL_SIZE)
    nhpanels = int(w/PANNEL_SIZE)
    new_h, new_w = h, w
    if nvpanels*PANNEL_SIZE != h:
        new_h = (nvpanels+1)*PANNEL_SIZE
    if nhpanels*PANNEL_SIZE != w:
        new_w = (nhpanels+1)*PANNEL_SIZE
    if new_h == h and new_w == w:
        return img
    else:
        return resize(img, output_shape=(new_h, new_w), preserve_range=True)

def split_panels(img):
    # cut the image into PANNEL_SIZE x PANNEL_SIZE tiles, row by row
    h, w, _ = img.shape
    num_vert_panels = int(h/PANNEL_SIZE)
    num_hor_panels = int(w/PANNEL_SIZE)
    panels = []
    for i in range(num_vert_panels):
        for j in range(num_hor_panels):
            panels.append(img[i*PANNEL_SIZE:(i+1)*PANNEL_SIZE,j*PANNEL_SIZE:(j+1)*PANNEL_SIZE])
    return np.stack(panels)

def combine_panels(img, panels):
    # stitch the tiles back together in the same row-by-row order
    h, w, _ = img.shape
    num_vert_panels = int(h/PANNEL_SIZE)
    num_hor_panels = int(w/PANNEL_SIZE)
    total = []
    p = 0
    for i in range(num_vert_panels):
        row = []
        for j in range(num_hor_panels):
            row.append(panels[p])
            p += 1
        total.append(np.concatenate(row, axis=1))
    return np.concatenate(total, axis=0)

test_img = img_resize(test_img)
panels = split_panels(test_img)
out = combine_panels(test_img, panels)
print(panels.shape, test_img.shape, out.shape)

(104, 224, 224, 3) (1792, 2912, 3) (1792, 2912, 3)

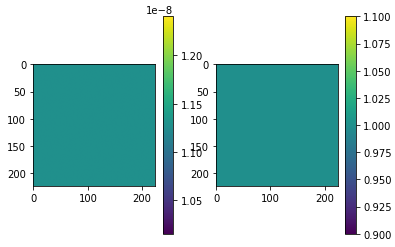
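The shapes printed above follow from rounding each side up to the next multiple of the panel size. A minimal sketch of that arithmetic, using hypothetical helper names (`padded_size`, `panel_count`) that are not part of the post's code:

```python
import math

PANEL = 224  # same value as PANNEL_SIZE in the post


def padded_size(h, w, panel=PANEL):
    """Round height and width up to the nearest multiple of the panel size."""
    return math.ceil(h / panel) * panel, math.ceil(w / panel) * panel


def panel_count(h, w, panel=PANEL):
    """Number of panel x panel tiles after rounding the image size up."""
    new_h, new_w = padded_size(h, w, panel)
    return (new_h // panel) * (new_w // panel)


print(padded_size(1760, 2800))  # (1792, 2912)
print(panel_count(1760, 2800))  # 104
```

That is 8 rows of 13 panels, which matches the 104 panels reported for the 1760 x 2800 test image.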

model = load_model('model.h5')

pred_panels = model.predict(panels).reshape((-1, PANNEL_SIZE, PANNEL_SIZE, 2))[:, :, :, 1]

pred_out = combine_panels(test_img, pred_panels)

# compute coordinates and confidence

argmax_x = np.argmax(np.max(pred_out, axis=0), axis=0)

argmax_y = np.argmax(np.max(pred_out, axis=1), axis=0)

confidence = np.amax(pred_out) * 100

print('(%s, %s) %.2f%%' % (argmax_x, argmax_y, confidence))

plt.figure(figsize=(20, 10))

plt.imshow(pred_out)

plt.colorbar()

(548, 1168) 99.79%

<matplotlib.colorbar.Colorbar at 0x24d3f623348>

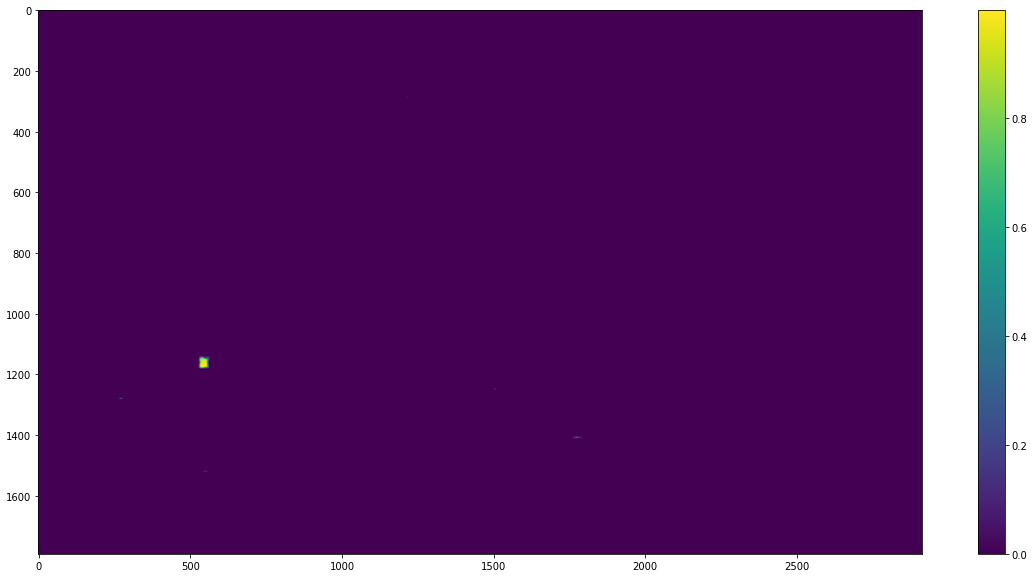
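The cell above finds the peak by taking the maximum along each axis and then the argmax of the result. An equivalent way to locate the single highest-confidence pixel is `np.unravel_index`; the sketch below uses a toy heatmap (`heat`) rather than the model's real output.

```python
import numpy as np

# Toy confidence map with one clear peak at row 4, column 2.
heat = np.zeros((6, 8), dtype=np.float32)
heat[4, 2] = 0.9

# argmax on the flattened map, converted back to (row, column)
peak_y, peak_x = np.unravel_index(np.argmax(heat), heat.shape)

# The per-axis approach used in the post gives the same coordinates.
argmax_x = np.argmax(np.max(heat, axis=0), axis=0)
argmax_y = np.argmax(np.max(heat, axis=1), axis=0)

print(peak_x, peak_y, argmax_x, argmax_y)
```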

def bbox_from_mask(img):
    # first and last rows/columns that contain any non-zero pixel
    rows = np.any(img, axis=1)
    cols = np.any(img, axis=0)
    y1, y2 = np.where(rows)[0][[0, -1]]
    x1, x2 = np.where(cols)[0][[0, -1]]
    return x1, y1, x2, y2

x1, y1, x2, y2 = bbox_from_mask((pred_out > 0.8).astype(np.uint8))

print(x1, y1, x2, y2)

# make overlay

overlay = np.repeat(np.expand_dims(np.zeros_like(pred_out, dtype=np.uint8), axis=-1), 3, axis=-1)

alpha = np.expand_dims(np.full_like(pred_out, 255, dtype=np.uint8), axis=-1)

overlay = np.concatenate([overlay, alpha], axis=-1)

overlay[y1:y2, x1:x2, 3] = 0

plt.figure(figsize=(20, 10))

plt.imshow(overlay)

534 1146 557 1177

<matplotlib.image.AxesImage at 0x24d3f955b48>

fig, ax = plt.subplots(figsize=(20, 10))

ax.imshow(test_img)

ax.imshow(overlay, alpha=0.5)

rect = patches.Rectangle((x1, y1), width=x2-x1, height=y2-y1, linewidth=1.5, edgecolor='r', facecolor='none')

ax.add_patch(rect)

ax.set_axis_off()
