
Installation Plan

- Install Hadoop 3.3.0

| hadoop-001 server | hadoop-002 server | hadoop-003 server |
|---|---|---|
| JournalNode | JournalNode | JournalNode |
| NameNode | NameNode | - |
| - | DataNode | DataNode |
| ResourceManager | NodeManager | NodeManager |
| JobHistoryServer | JobHistoryServer | - |
| ZooKeeper | ZooKeeper | ZooKeeper |
| Kafka | Kafka | Kafka |
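The table is also the reference for what should be running where, so once the cluster is up it can be compared against jps on each server. The sketch below keeps the plan as data; expected_daemons and missing_daemons are hypothetical helper names, ZooKeeper is matched as QuorumPeerMain (the name it has in the status output later in this post), and Kafka is left out because this post does not install it.

```shell
#!/usr/bin/env bash
# Sketch: the plan table as data (Kafka omitted, it is not installed here).
# ZooKeeper's JVM appears in jps as QuorumPeerMain.
expected_daemons() {
  case "$1" in
    hadoop-001) echo "JournalNode NameNode ResourceManager JobHistoryServer QuorumPeerMain" ;;
    hadoop-002) echo "JournalNode NameNode DataNode NodeManager JobHistoryServer QuorumPeerMain" ;;
    hadoop-003) echo "JournalNode DataNode NodeManager QuorumPeerMain" ;;
    *) return 1 ;;
  esac
}

# Reads jps output ("<pid> <name>" per line) on stdin and prints every
# planned daemon for the given host that is not in that output.
missing_daemons() {
  local host="$1" running name
  running=$(awk '{print $2}')
  for name in $(expected_daemons "$host"); do
    if ! grep -qx "$name" <<< "$running"; then
      echo "$name"
    fi
  done
}
```

Usage on each server: jps | missing_daemons "$(hostname)". An empty result means every planned daemon is running. Note that the start script later in this post only starts the JobHistoryServer on hadoop-001, so hadoop-002 will report it as missing unless you start it there separately.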

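Prerequisites

1. Add the hosts file entries

Add the master node and the data nodes to the hosts file (/etc/hosts) on every server.

If your servers can already resolve each other's host names (for example physical servers registered in DNS), this step is not needed. I tested this setup on VirtualBox.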
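Appending the entries by hand works, but re-running the setup can leave duplicate lines. A small helper can append an entry only when the host name is not listed yet; this is a sketch, and add_host_entry and the HOSTS_FILE override are my own hypothetical additions.

```shell
#!/usr/bin/env bash
# Sketch: append "IP hostname" only if the hostname is not already present.
# HOSTS_FILE defaults to /etc/hosts (writing it requires root).
add_host_entry() {
  local ip="$1" name="$2" file="${HOSTS_FILE:-/etc/hosts}"
  # look for the name as a whole field on any non-comment line
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    echo "exists: $name"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
    echo "added: $name"
  fi
}
```

Run it as root (for example in a sudo -i shell) with the addresses below, e.g. add_host_entry 20.200.0.110 hadoop-001, once per server.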

# hadoop

20.200.0.110 hadoop-001

20.200.0.102 hadoop-002

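20.200.0.103 hadoop-003

2. Generate an SSH key pair and set up access

Also add hadoop-001's public key to ~/.ssh/authorized_keys on every other server (the data node servers), so that the master node can log in to them without a password.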

hadoop@hadoop-001:~$ mkdir ~/.ssh/

hadoop@hadoop-001:~$ ssh-keygen -t rsa -f ~/.ssh/id_rsa

Generating public/private rsa key pair.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:yCGThyuzJOWWysUBF5g+UYmEOrjhk4Gq/kunRTXrA/o hadoop@hadoop-001

The key's randomart image is:

+---[RSA 3072]----+

|oo=+o |

|.=o. o |

|= o.= oo |

|**...*.oo |

|++@o.oo.S |

|+O.+o o |

|o.oo o o |

|. . = . |

|...+.E |

+----[SHA256]-----+

hadoop@hadoop-001:~$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

hadoop@hadoop-001:~$ chmod 0600 ~/.ssh/authorized_keys

hadoop@hadoop-001:~$ ls -al ~/.ssh/authorized_keys

-rw------- 1 hadoop hadoop 571 Dec 17 14:23 /home/hadoop/.ssh/authorized_keys

hadoop@hadoop-001:~$ cat ~/.ssh/authorized_keys

ssh-rsa AAAAB3NzaC1yc...<omitted>

3. Change the ownership and permissions of the SSH keys

hadoop@hadoop-001:~$ sudo chown -R hadoop:hadoop ~/.ssh

hadoop@hadoop-001:~$ sudo chmod 600 ~/.ssh/id_rsa

hadoop@hadoop-001:~$ sudo chmod 644 ~/.ssh/id_rsa.pub

hadoop@hadoop-001:~$ sudo chmod 644 ~/.ssh/authorized_keys

hadoop@hadoop-001:~$ sudo chmod 644 ~/.ssh/known_hosts
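The steps above only prepare hadoop-001 itself. One way to push its public key to the other servers and confirm that password-less login works is ssh-copy-id followed by a non-interactive ssh call. This is a sketch; distribute_key, KEY and DRY_RUN are hypothetical names. Because the sshfence method in hdfs-site.xml has one NameNode host log in to the other, you will likely need to run it on hadoop-002 for hadoop-001 as well.

```shell
#!/usr/bin/env bash
# Sketch: copy the hadoop user's public key to other servers and check that
# key-based login works. DRY_RUN=1 only prints the commands.
distribute_key() {
  local key="${KEY:-$HOME/.ssh/id_rsa.pub}" host
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "ssh-copy-id -i $key hadoop@$host"
      echo "ssh -o BatchMode=yes hadoop@$host hostname"
    else
      # asks for the hadoop password on $host once
      ssh-copy-id -i "$key" "hadoop@$host" || return 1
      # BatchMode=yes never prompts, so this fails if key login is not working
      ssh -o BatchMode=yes "hadoop@$host" hostname || return 1
    fi
  done
}
```

Usage: on hadoop-001 run distribute_key hadoop-002 hadoop-003, and on hadoop-002 run distribute_key hadoop-001.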

Installation Steps

1. Install OpenJDK 8

hadoop@hadoop-001:~$ sudo apt-get install openjdk-8-jdk -y

[sudo] password for hadoop:

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

ca-certificates-java fonts-dejavu-extra java-common libatk-wrapper-java libatk-wrapper-java-jni libice-dev libpthread-stubs0-dev libsm-dev libx11-dev libxau-dev libxcb1-dev libxdmcp-dev libxt-dev openjdk-8-jdk-headless openjdk-8-jre

openjdk-8-jre-headless x11proto-core-dev x11proto-dev xorg-sgml-doctools xtrans-dev

Suggested packages:

default-jre libice-doc libsm-doc libx11-doc libxcb-doc libxt-doc openjdk-8-demo openjdk-8-source visualvm icedtea-8-plugin fonts-ipafont-gothic fonts-ipafont-mincho fonts-wqy-microhei fonts-wqy-zenhei

The following NEW packages will be installed:

ca-certificates-java fonts-dejavu-extra java-common libatk-wrapper-java libatk-wrapper-java-jni libice-dev libpthread-stubs0-dev libsm-dev libx11-dev libxau-dev libxcb1-dev libxdmcp-dev libxt-dev openjdk-8-jdk openjdk-8-jdk-headless

openjdk-8-jre openjdk-8-jre-headless x11proto-core-dev x11proto-dev xorg-sgml-doctools xtrans-dev

0 upgraded, 21 newly installed, 0 to remove and 178 not upgraded.

Need to get 43.4 MB of archives.

After this operation, 162 MB of additional disk space will be used.

Get:1 http://kr.archive.ubuntu.com/ubuntu focal/main amd64 java-common all 0.72 [6,816 B]

Get:2 http://kr.archive.ubuntu.com/ubuntu focal-updates/universe amd64 openjdk-8-jre-headless amd64 8u292-b10-0ubuntu1~20.04 [28.2 MB]

...

Setting up libxt-dev:amd64 (1:1.1.5-1) ...

hadoop@hadoop-001:~$ java -version

openjdk version "1.8.0_292"

OpenJDK Runtime Environment (build 1.8.0_292-8u292-b10-0ubuntu1~20.04-b10)

OpenJDK 64-Bit Server VM (build 25.292-b10, mixed mode)

2. Download Hadoop

hadoop@hadoop-001:~$ sudo curl -o /usr/local/hadoop-3.3.0.tar.gz https://downloads.apache.org/hadoop/common/hadoop-3.3.0/hadoop-3.3.0.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 477M 100 477M 0 0 2796k 0 0:02:54 0:02:54 --:--:-- 4997k
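Before extracting, it is worth checking the tarball against the SHA-512 checksum that Apache publishes next to each release (typically a file such as hadoop-3.3.0.tar.gz.sha512). Older releases are eventually moved from downloads.apache.org to archive.apache.org, so if the URL above stops working, look under https://archive.apache.org/dist/hadoop/common/. The helper below is a sketch: verify_sha512 is a hypothetical name and the expected value is pasted in by hand from the published checksum file.

```shell
#!/usr/bin/env bash
# Sketch: compare a file's SHA-512 digest with an expected hex value.
verify_sha512() {
  local file="$1" expected="$2" actual
  actual=$(sha512sum "$file" | awk '{print $1}')
  # published checksum files differ in case and spacing, so normalise first
  expected=$(printf '%s' "$expected" | tr -d ' ' | tr 'A-F' 'a-f')
  if [ "$actual" = "$expected" ]; then
    echo "OK"
  else
    echo "MISMATCH"
    return 1
  fi
}
```

Usage: verify_sha512 /usr/local/hadoop-3.3.0.tar.gz followed by the hash copied from the checksum file; only extract when it prints OK.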

hadoop@hadoop-001:~$ sudo mkdir -p /usr/local/hadoop && sudo tar -xvzf /usr/local/hadoop-3.3.0.tar.gz -C /usr/local/hadoop --strip-components 1

...

hadoop-3.3.0/lib/

hadoop-3.3.0/lib/native/

hadoop-3.3.0/lib/native/libhadoop.a

...

hadoop@hadoop-001:~$ sudo rm -rf /usr/local/hadoop-3.3.0.tar.gz

hadoop@hadoop-001:~$ sudo chown -R $USER:$USER /usr/local/hadoop

hadoop@hadoop-001:~$ ls -al /usr/local/hadoop/

total 116

drwxr-xr-x 10 hadoop hadoop 4096 Dec 17 11:38 .

drwxr-xr-x 11 root root 4096 Dec 17 11:43 ..

-rw-rw-r-- 1 hadoop hadoop 22976 Jul 5 2020 LICENSE-binary

-rw-rw-r-- 1 hadoop hadoop 15697 Mar 25 2020 LICENSE.txt

-rw-rw-r-- 1 hadoop hadoop 27570 Mar 25 2020 NOTICE-binary

-rw-rw-r-- 1 hadoop hadoop 1541 Mar 25 2020 NOTICE.txt

-rw-rw-r-- 1 hadoop hadoop 175 Mar 25 2020 README.txt

drwxr-xr-x 2 hadoop hadoop 4096 Jul 7 2020 bin

drwxr-xr-x 3 hadoop hadoop 4096 Jul 7 2020 etc

drwxr-xr-x 2 hadoop hadoop 4096 Jul 7 2020 include

drwxr-xr-x 3 hadoop hadoop 4096 Jul 7 2020 lib

drwxr-xr-x 4 hadoop hadoop 4096 Jul 7 2020 libexec

drwxr-xr-x 2 hadoop hadoop 4096 Jul 7 2020 licenses-binary

drwxr-xr-x 3 hadoop hadoop 4096 Jul 7 2020 sbin

drwxr-xr-x 4 hadoop hadoop 4096 Jul 7 2020 share

3. Register the environment variables in /etc/profile

hadoop@hadoop-001:~$ sudo vi /etc/profile

# hadoop cluster

JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

HADOOP_HOME=/usr/local/hadoop

HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HADOOP_HOME/lib/native

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

4. Hadoop configuration files
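The variables from step 3 are only picked up by new login shells, so reload the profile (source /etc/profile) before editing the files below. A quick check like the following sketch confirms that the three paths are set and point at existing directories; check_hadoop_env is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: report any of the profile variables that is unset or not a directory.
check_hadoop_env() {
  local status=0 var val
  for var in JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR; do
    val="${!var}"   # indirect expansion: the value of the variable named $var
    if [ -z "$val" ]; then
      echo "missing: $var"
      status=1
    elif [ ! -d "$val" ]; then
      echo "not a directory: $var=$val"
      status=1
    fi
  done
  return "$status"
}
```

Usage: source /etc/profile && check_hadoop_env && hadoop version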

4-1. core-site.xml

$HADOOP_HOME/etc/hadoop/core-site.xml

hadoop@hadoop-001:~$ vi $HADOOP_HOME/etc/hadoop/core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hdfs-cluster</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop/tmp</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>hadoop-001:2181,hadoop-002:2181,hadoop-003:2181</value>

</property>

</configuration>

4-2. hdfs-site.xml

$HADOOP_HOME/etc/hadoop/hdfs-site.xml

hadoop@hadoop-001:~$ vi $HADOOP_HOME/etc/hadoop/hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>/usr/local/hadoop/data/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/usr/local/hadoop/data/datanode</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/usr/local/hadoop/journalnode</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>hdfs-cluster</value>

</property>

<property>

<name>dfs.ha.namenodes.hdfs-cluster</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.hdfs-cluster.nn1</name>

<value>hadoop-001:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.hdfs-cluster.nn2</name>

<value>hadoop-002:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.hdfs-cluster.nn1</name>

<value>hadoop-001:9870</value>

</property>

<property>

<name>dfs.namenode.http-address.hdfs-cluster.nn2</name>

<value>hadoop-002:9870</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop-001:8485;hadoop-002:8485;hadoop-003:8485/hdfs-cluster</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.hdfs-cluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>hadoop-001:2181,hadoop-002:2181,hadoop-003:2181</value>

</property>

</configuration>

4-3. yarn-site.xml

$HADOOP_HOME/etc/hadoop/yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop-001</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.application.classpath</name>

<value>

/usr/local/hadoop/share/hadoop/mapreduce/*,

/usr/local/hadoop/share/hadoop/mapreduce/lib/*,

/usr/local/hadoop/share/hadoop/common/*,

/usr/local/hadoop/share/hadoop/common/lib/*,

/usr/local/hadoop/share/hadoop/hdfs/*,

/usr/local/hadoop/share/hadoop/hdfs/lib/*,

/usr/local/hadoop/share/hadoop/yarn/*,

/usr/local/hadoop/share/hadoop/yarn/lib/*

</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>hadoop-001:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>hadoop-001:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>hadoop-001:8031</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>hadoop-001:2181,hadoop-002:2181,hadoop-003:2181</value>

</property>

</configuration>

4-4. mapred-site.xml

$HADOOP_HOME/etc/hadoop/mapred-site.xml

hadoop@hadoop-001:~$ vi $HADOOP_HOME/etc/hadoop/mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

</configuration>

4-5. workers

$HADOOP_HOME/etc/hadoop/workers

hadoop@hadoop-001:~$ vi $HADOOP_HOME/etc/hadoop/workers

hadoop-002

hadoop-003

4-6. hadoop-env.sh

$HADOOP_HOME/etc/hadoop/hadoop-env.sh

hadoop@hadoop-001:~$ vi $HADOOP_HOME/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

HADOOP_HOME=${HADOOP_HOME:-/usr/local/hadoop}

HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-$HADOOP_HOME/etc/hadoop}

export HADOOP_PID_DIR=${HADOOP_HOME}/pids

export HADOOP_SECURE_DN_PID_DIR=${HADOOP_PID_DIR}

5. Write the startup shell script (save it as /etc/init.d/hadoop)
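Before writing the script, the files from step 4 can be sanity-checked. A common HA mistake is an fs.defaultFS in core-site.xml that does not match dfs.nameservices in hdfs-site.xml. The sketch below reads values with awk; hadoop_prop and check_default_fs are hypothetical helpers, and the parser only understands the simple layout used in this post (name and value elements on their own lines). It is not a general XML parser.

```shell
#!/usr/bin/env bash
# Sketch: print the <value> of the property called $2 in the *-site.xml file $1.
hadoop_prop() {
  local file="$1" key="$2"
  awk -v key="$key" '
    /<name>/  { n = $0; gsub(/.*<name>|<\/name>.*/, "", n) }
    /<value>/ { if (n == key) { v = $0; gsub(/.*<value>|<\/value>.*/, "", v); print v; exit } }
  ' "$file"
}

# fs.defaultFS must be hdfs://<nameservice> for clients to use the HA pair.
check_default_fs() {
  local conf="${HADOOP_CONF_DIR:-/usr/local/hadoop/etc/hadoop}" fs ns
  fs=$(hadoop_prop "$conf/core-site.xml" fs.defaultFS)
  ns=$(hadoop_prop "$conf/hdfs-site.xml" dfs.nameservices)
  if [ -n "$ns" ] && [ "$fs" = "hdfs://$ns" ]; then
    echo "OK: $fs"
  else
    echo "MISMATCH: fs.defaultFS=$fs dfs.nameservices=$ns"
    return 1
  fi
}
```

Once Hadoop is on the PATH, hdfs getconf -confKey fs.defaultFS returns the value Hadoop itself resolves, which is the more reliable check.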

#!/bin/bash

### BEGIN INIT INFO

# Provides: hadoop

# Required-Start: $all

# Required-Stop:

# Default-Start: 2 3 4 5

# Default-Stop:

# Short-Description: hadoop service

### END INIT INFO

HADOOP_HOME=${HADOOP_HOME:-/usr/local/hadoop}

HADOOP_PID_DIR=${HADOOP_PID_DIR:-${HADOOP_HOME}/pids}

# hadoop-001 runs no DataNode, so start/stop check the ResourceManager PID file, which exists on hadoop-001 while YARN is up

HADOOP_PID_RM_FILE=$HADOOP_PID_DIR/hadoop-hadoop-resourcemanager.pid

case "$1" in

node_start)

PID_FILE="$HADOOP_PID_DIR/hadoop-hadoop-$2.pid"

echo -n "Starting node: "

if [ -f "$PID_FILE" ] ; then

echo "Already started"

exit 3

else

# an optional third argument runs a one-off command first, e.g. namenode -bootstrapStandby

if [ "$3" != "" ] ; then

$HADOOP_HOME/bin/hdfs $2 $3

fi

$HADOOP_HOME/bin/hdfs --daemon start $2

echo "done."

exit 0

fi

;;

node_stop)

PID_FILE="$HADOOP_PID_DIR/hadoop-hadoop-$2.pid"

echo -n "Stopping node: "

if [ -f "$PID_FILE" ] ; then

$HADOOP_HOME/bin/hdfs --daemon stop $2

echo "done."

else

echo "Already stopped"

exit 3

fi

;;

node_status)

PID_FILE="$HADOOP_PID_DIR/hadoop-hadoop-$2.pid"

if [ -f "$PID_FILE" ] ; then

PID=$(cat "$PID_FILE" 2>/dev/null)

echo "Running $PID"

exit 0

else

echo "Stopped"

exit 3

fi

;;

node_restart)

# pass the daemon name on; without $2 the PID file path would end in hadoop-hadoop-.pid

$0 node_stop $2

sleep 2

$0 node_start $2

;;

init)

rm -rf $HADOOP_HOME/data/namenode/*

# Zookeeper Failover Controller

$HADOOP_HOME/bin/hdfs zkfc -formatZK

$HADOOP_HOME/bin/hdfs namenode -format -force

$HADOOP_HOME/bin/hdfs namenode -initializeSharedEdits

;;

start)

echo -n "Starting hadoop: "

if [ -f "$HADOOP_PID_RM_FILE" ] ; then

echo "Already started"

exit 3

else

$HADOOP_HOME/sbin/start-dfs.sh

$HADOOP_HOME/sbin/start-yarn.sh

$HADOOP_HOME/bin/mapred --daemon start historyserver

echo "done."

exit 0

fi

;;

stop)

echo -n "Stopping hadoop: "

if [ -f "$HADOOP_PID_RM_FILE" ] ; then

$HADOOP_HOME/sbin/stop-dfs.sh

$HADOOP_HOME/sbin/stop-yarn.sh

$HADOOP_HOME/bin/mapred --daemon stop historyserver

echo "done."

exit 0

else

echo "Already stopped"

exit 3

fi

;;

status)

/usr/bin/jps

echo '------------------------------------------'

$HADOOP_HOME/bin/hdfs haadmin -getAllServiceState

;;

restart)

$0 stop

sleep 2

$0 start

;;

*)

echo "Usage: $0 {init|start|stop|status|restart|node_start|node_stop|node_status|node_restart}"

exit 1

;;

esac

6. Make the script executable

hadoop@hadoop-001:~$ sudo chmod 755 /etc/init.d/hadoop
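The JournalNode and NameNode steps below run /etc/init.d/hadoop on hadoop-002 and hadoop-003 as well, so the configuration from step 4, the /etc/profile entries and this script must also exist on those servers. A sketch of copying them from hadoop-001, assuming Hadoop is already extracted to /usr/local/hadoop and owned by hadoop on every server and rsync is installed on both ends; sync_cluster_files, run and DRY_RUN are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: copy the Hadoop configuration directory and the init script from
# hadoop-001 to the other servers.

# run: print the command when DRY_RUN=1, otherwise execute it
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

sync_cluster_files() {
  local conf="${HADOOP_CONF_DIR:-/usr/local/hadoop/etc/hadoop}" host
  for host in "$@"; do
    # trailing slashes: sync the directory contents into the same remote path
    run rsync -a "$conf/" "hadoop@$host:$conf/" || return 1
    # /etc/init.d needs root on the remote side, so stage the script in /tmp
    run scp /etc/init.d/hadoop "hadoop@$host:/tmp/hadoop-init" || return 1
  done
}
```

Usage: sync_cluster_files hadoop-002 hadoop-003, then on each of those servers run sudo install -m 755 /tmp/hadoop-init /etc/init.d/hadoop and repeat the /etc/profile edit from step 3.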

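Start / Stop

1. Initial setup

Run the initial setup on hadoop-001 only. It formats the failover znode in ZooKeeper and then the NameNode. Because hdfs namenode -format also formats the JournalNode quorum set in dfs.namenode.shared.edits.dir, ZooKeeper and the JournalNodes need to be running at this point; if the JournalNodes are not up yet, start them as in step 2 first.

hadoop@hadoop-001:~$ /etc/init.d/hadoop init

2. Start the JournalNodes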

Start a JournalNode on every server.

hadoop@hadoop-001:~$ /etc/init.d/hadoop node_start journalnode

hadoop@hadoop-002:~$ /etc/init.d/hadoop node_start journalnode

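hadoop@hadoop-003:~$ /etc/init.d/hadoop node_start journalnode

3. Start the active NameNode

Run on hadoop-001.

hadoop@hadoop-001:~$ /etc/init.d/hadoop node_start namenode

4. Start the standby NameNode

Run on hadoop-002. The -bootstrapStandby option first copies the namespace metadata from the active NameNode, and then the daemon is started.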

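hadoop@hadoop-002:~$ /etc/init.d/hadoop node_start namenode -bootstrapStandby

5. Start the DataNodes and the remaining services

Run this on hadoop-001 only. It starts the DataNodes and ZKFCs (start-dfs.sh), the ResourceManager and NodeManagers (start-yarn.sh), and the JobHistoryServer on hadoop-001.

hadoop@hadoop-001:~$ /etc/init.d/hadoop start

6. Status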

hadoop@hadoop-001:~$ /etc/init.d/hadoop status

14450 DFSZKFailoverController

15189 Jps

11541 JournalNode

13798 NameNode

637 QuorumPeerMain

14701 JobHistoryServer

14575 ResourceManager

------------------------------------------

hadoop-001:8020 active

hadoop-002:8020 standby

웹 관리

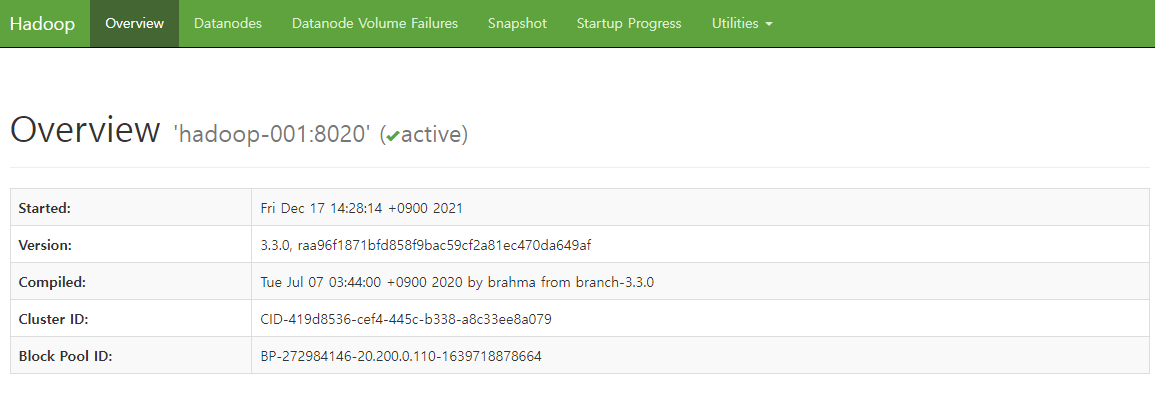

NameNode

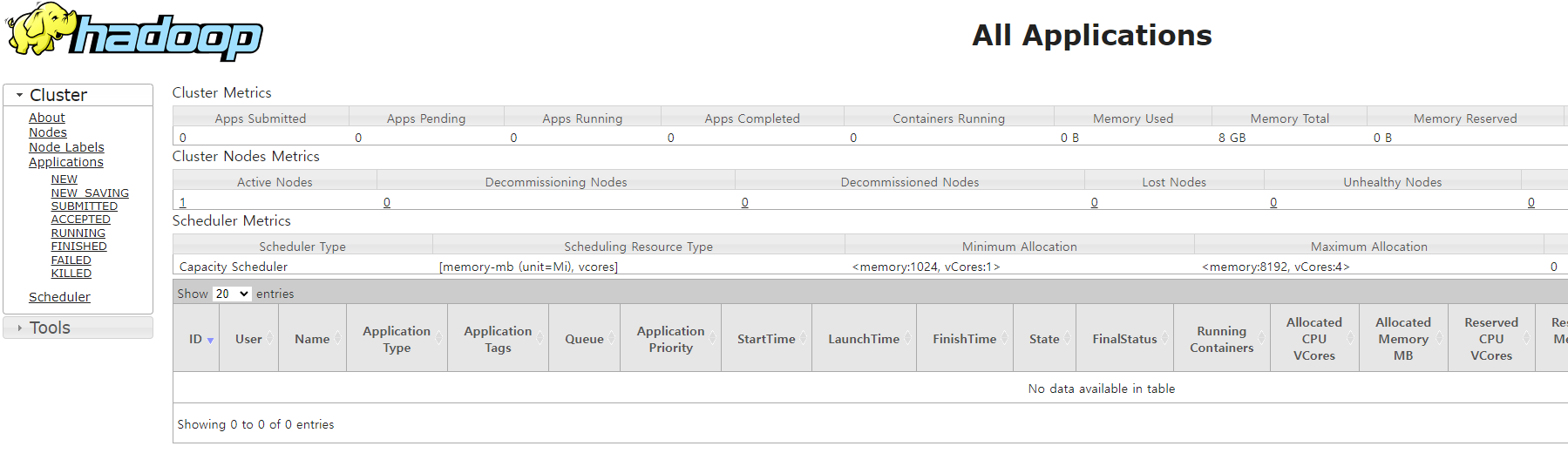

ResourceManager

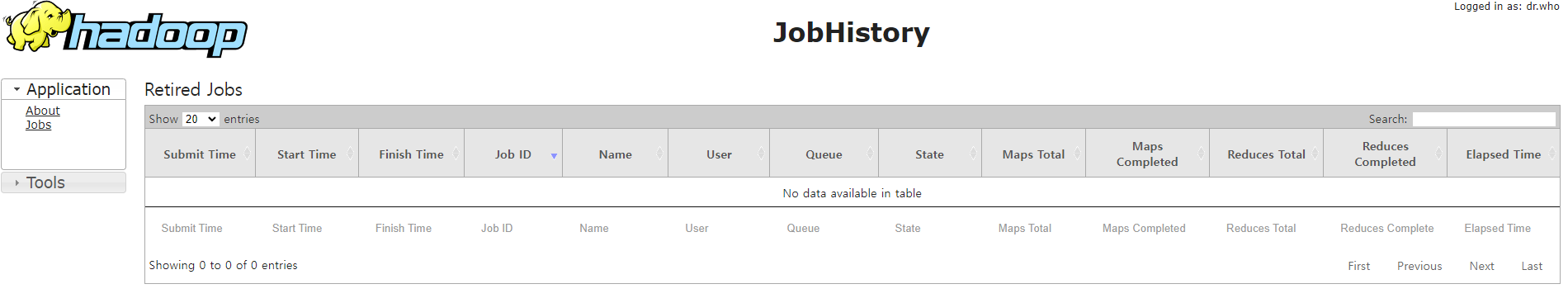

MapReduce JobHistory
